IT Home, December 13: Meta announced on Thursday the launch of Meta Motivo, an artificial intelligence model designed to control the movements of humanoid digital agents, with the aim of enhancing the metaverse experience.

Meta also released other AI tools, including the Large Concept Model (LCM) and the video watermarking tool Video Seal, and reiterated its commitment to continued investment in AI, AR and metaverse technologies.

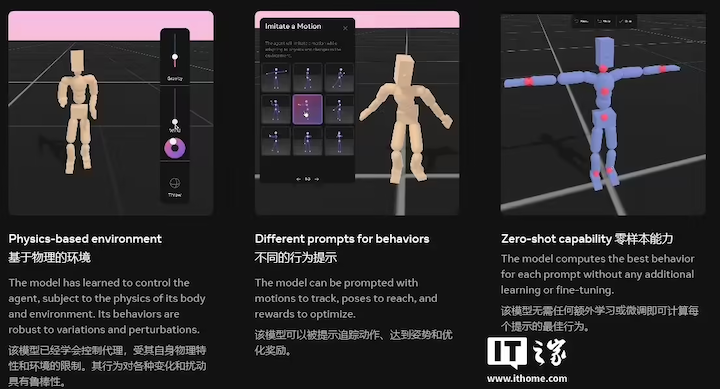

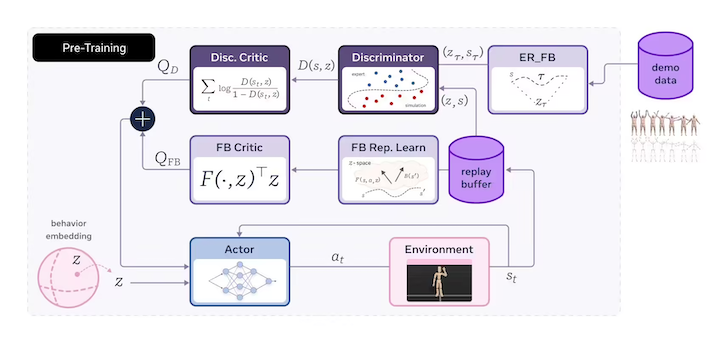

IT Home Note: Meta Motivo is a behavioral foundation model trained in the MuJoCo simulator on a subset of the AMASS motion capture dataset and 30 million online interaction samples. It is pre-trained with a new unsupervised reinforcement learning algorithm to control the movement of a complex virtual humanoid agent.

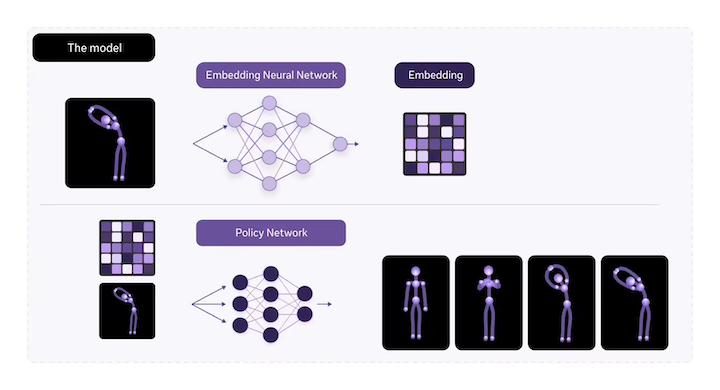

Meta Motivo is trained using a novel algorithm, FB-CPR, which uses a dataset of unlabeled motions to steer unsupervised reinforcement learning toward human-like behaviors while retaining zero-shot inference capabilities.

Although the model is not explicitly trained on any specific task, its performance on tasks such as motion trajectory tracking (e.g. cartwheels), pose reaching (e.g. an arabesque), and reward optimization (e.g. running) improves over the course of pre-training, and its behavior becomes more human-like.

The key technical innovation of the algorithm lies in learning representations that embed states, actions, and rewards into the same latent space. As a result, Meta Motivo is able to solve a variety of full-body control tasks, including motion tracking, target pose reaching, and reward optimization, without any additional training or planning.
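To make the idea of a shared latent space more concrete, here is a minimal sketch of how the three task types could each be turned into a single latent "prompt" vector. It is an illustration under stated assumptions, not Meta's released code: the state embedding is a fixed random linear map standing in for a learned network, and all names (`embed_state`, `prompt_from_reward`, `prompt_from_goal`, `prompts_from_motion`, the dimensions and the averaging window) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
STATE_DIM, LATENT_DIM = 6, 4  # toy sizes, chosen only for illustration

# Hypothetical stand-in for a learned state embedding: a fixed random linear map.
W_B = rng.normal(size=(LATENT_DIM, STATE_DIM))


def embed_state(states):
    """Map states of shape (n, STATE_DIM) to latents of shape (n, LATENT_DIM)."""
    return np.atleast_2d(states) @ W_B.T


def normalize(z):
    """Rescale a latent to a fixed norm so every task prompt has the same scale."""
    return np.sqrt(LATENT_DIM) * z / np.linalg.norm(z)


def prompt_from_reward(states, rewards):
    """Reward optimization: reward-weighted average of state embeddings."""
    return normalize((embed_state(states) * rewards[:, None]).mean(axis=0))


def prompt_from_goal(goal_state):
    """Pose reaching: the embedding of the target pose itself."""
    return normalize(embed_state(goal_state)[0])


def prompts_from_motion(trajectory, window=3):
    """Motion tracking: one latent per step, averaged over the next few states."""
    emb = embed_state(trajectory)
    return np.stack([
        normalize(emb[t + 1:t + 1 + window].mean(axis=0))
        for t in range(len(trajectory) - 1)
    ])


# Toy data: random states, a reward equal to the first state coordinate,
# one target pose, and a 10-step reference motion.
states = rng.normal(size=(100, STATE_DIM))
rewards = states[:, 0]
z_reward = prompt_from_reward(states, rewards)
z_goal = prompt_from_goal(rng.normal(size=STATE_DIM))
z_track = prompts_from_motion(rng.normal(size=(10, STATE_DIM)))
```

In this sketch, all three task descriptions end up as vectors in the same space, so a single policy conditioned on such a vector could in principle be asked to do any of them without retraining. The policy itself is omitted here.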
